[Cross posted to Quantified Self]

QS Boston Meetup #5 was held on Wednesday on the topic "The Science of Sleep," a subject that comes up here regularly. The event was a major success and, to my mind, demonstrated powerfully the potential of the self-experimentation movement and the exceptional people making it happen. Here is a brief recap of the evening, with my comments on what was discussed. A big thanks to Zeo for their generous support of the meeting, to QS Boston leader Michael Nagle, and to sprout for hosting the event.

Experiment-in-action: A participatory Zeo sleep trial

Michael put the theme into action in a unique way: he arranged a free 30-day trial of Zeo sleep sensors for any members who were interested in experimenting with them and willing to give a short presentation about their results. Over a dozen people participated, and the talks were a treat that stimulated lots of discussion. As the talks demonstrated, this was an excellent way to draw on the talents of this community's impressive members.

Steve Fabregas

Zeo research scientist Steve Fabregas kicked off the meetup by explaining the complex mechanisms of sleep and the challenges of creating a consumer tool that balances invasiveness, fidelity, and ease of use. He talked about Zeo's initial focus (managing sleep inertia by waking you up strategically), which, in prime startup fashion, evolved into the final product. Steve also gave a rundown of the device's performance, including the neural network-based algorithm that infers sleep states from the noisy raw data, a task he said even humans find difficult. There were lots of questions afterward, including ones about the API and about variations in the data by age and gender. All in all, a great talk.

Sanjiv Shah

Sanjiv started off the sleep trial presentations with a lively talk about the many experiments he's done to improve his sleep, including a pitch-black room, ear plugs, and no alcohol or caffeine. But the biggest surprise (to him and to us) was his discovery that wearing glasses with a particular shade of yellow lenses for three hours before bed improved his sleep dramatically. This is apparently based on research into the sleep-disturbing frequencies of artificial light. He also shared how wearing them helped reduce jet lag. The talk was a hit, with folks clamoring to know where to get the glasses. I found this page helpful in understanding the science. (An aside: If you're interested in trying these out in a group experiment, please let me know. I am definitely going to test them.)

Adriel Irons

Adriel studied the impact of weather on his sleep (measured via the Zeo's calculated ZQ score) by recording things like temperature, dew point, and air pressure. He concluded that there may be a connection between his sleep and changes in those measures, but said he needs more time and data. Audience questions covered measuring indoor vs. outdoor conditions, sunrise and sunset times, and cloudiness.

Susan Putnins

Susan tested the effect of colored lights (green and purple) on sleep. Her conclusion was that they had no impact. As a surprise, though, she made a discovery about the side effects of a particular medication on her sleep: there were none! This is a fine example of what I call the serendipity of experimentation.

Eric Smith

Eric tried a novel application of the Zeo: Testing it during the day. His surprise: The device mistakenly thought he was asleep for a good portion of the day. He got chuckles by reflecting on the Matrix-like metaphysical implications, such as "Am I really awake?" and "Am I a bizarre case?" His results kicked off a useful discussion about the Zeo's algorithms and the difficulty of inferring sleep state. Essentially, the device's algorithm is trained on data from a particular set of individuals and is designed to be used at night. Fortunately, the consensus was that Eric is not abnormal.

Jacqueline Thong

Jacqueline finished up the participatory talks with an experiment testing whether she can sleep anywhere. Her baseline was two weeks sleeping in her bed, followed by periods sleeping on the couch and then on the floor. Her conclusion was that where she slept didn't seem to matter. One reason I liked Jacqueline's experiment is that it produced a surprise, and, as with so many experiments, surprises are rich and satisfying. Think bread mould. She said more data was needed, along with more controls. Sadly, she now wonders whether her expensive mattress was worth it. Look for it on eBay.

Matt Bianchi

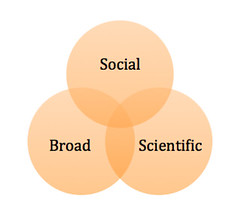

Matt Bianchi, a sleep doctor at Mass General, finished out the meetup with a discussion of the science and practice of sleep research. Pictures and a description of what a sleep lab is like brought home the point that what is measured there is not "normal" sleep: 40 minutes of setup and attaching electrodes, 200 feet of wires, and constant video and audio monitoring make for a novel $2,000 night. He said these labs give valuable information about disorders like sleep apnea, but that what ultimately matters is finding something that works for each individual. Given the multitude of contributing factors (he listed over a dozen, including medications, health, stress, anxiety, caffeine, exercise, sex, and light), trying things out for yourself is crucial. He also talked about the difficulties of measuring sleep, for example the unreliability of self-reported information. This made me wonder about the limits of what we can realistically monitor about ourselves. Clearly tools like Zeo can play an important role. Questions to him included how to be awake more (one member said, "I'm willing to be tired, but not to die sooner"), to which he replied that the number of hours of sleep each of us needs varies widely. (The eight-hour guideline is apparently "junk.")

Matt's talk prompted a discussion about the relative value of exploring small effects. The thought is that we should look for simple changes that have big results, i.e., the low-hanging fruit. One suggested heuristic: If you're not seeing a result after 5-10 days, move on to something else. A related rule might be that the more subtle the effect, the more data points you need. I'd love to have a discussion about that idea, because some things take more time to manifest. (I explored some of this in my post Designing good experiments: Some mistakes and lessons.)

Finally, Matt highlighted the importance of self-experimentation. His point was that large trials tell us what works for groups of people, but the ultimate test is what works for each of us individually. (He called this "individualizing medicine.") This struck a chord with me, because the enormous potential of personal experimentation is exactly what's so exciting about the work we're all doing here. All in all, a great meetup.

[Image courtesy of Keith Simmons]

(Matt is a terminally curious ex-NASA engineer and avid self-experimenter. His projects include developing the Think, Try, Learn philosophy, creating the Edison experimenter's journal, and writing at his blog, The Experiment-Driven Life. Give him a holler at matt@matthewcornell.org)

Saturday, July 2, 2011 at 1:05PM

I recently received an email from someone having trouble keeping up with her experiment. While there is lots of general advice about discipline and motivation, this got me thinking about how doing personal experiments might differ. Following are a few brief thoughts, but I'd love to hear ways that you keep motivated in your quantified self work.